When working on an AWS account it’s sometimes useful to have CLI access to run commands that automate repetitive tasks or to get to pesky resources that don’t show up on the console. If you federate into an AWS account through Identity Center you could simply get the credentials through the portal when selecting an account. If you get console access through other means those credentials are not always available.

Read more...


A few weeks ago, I stumbled upon Excalidraw, which is a whiteboarding/diagramming tool that has a certain aesthetic. I liked the design of the diagrams I saw, so I decided to give it a try. Coming from drawio, I was immediately impressed with how intuitive it felt. After playing around with Excalidraw for an hour, I decided it was worth actually using for work, and so I hosted it locally on my machine. The setup was pretty easy to do: clone the repository, install the alarmingly many dependencies, build the app, and then grab the static files from excalidraw-app/build and shove them behind a web server.

Read more...

AWS Backup is a service that helps orchestrate, audit, and restore backups within and across AWS accounts. The contents of this post are my personal notes on backup plans and do not reflect the views of my employer.

A core component of the AWS Backup service is the backup plan. The AWS documentation describes a backup plan as:

[…] a policy expression that defines when and how you want to back up your AWS resources.

Read more...

I’ve been spending more time browsing StackOverflow recently and came across a question asking if it was possible to find duplicate objects within an S3 bucket. One way would be to hash the object prior to upload and store the value in a local or remote data store. If that’s not possible or too much overhead, I figured I could use S3 Metadata and Athena to solve this, both services I’ve covered on this blog not too long ago. Athena alone has come up a few times this year, just because I’ve been finding interesting use cases for it. While I am an AWS employee, everything I’ve written, and will write, on this blog has always been out of personal interest. There are no sponsored posts here and all opinions are my own.

Read more...

Amazon S3 Intelligent-Tiering moves your data to the most cost-effective S3 storage tier based on each object’s access pattern, for the price of $0.0025 per 1,000 objects it monitors. Since the movement is done by the service you don’t know, or need to know, which access tier an object is currently in, as all objects can be retrieved synchronously. If you opt in to the asynchronous archive tiers, you can find out whether an object is in one of these tiers by requesting the HEAD of the object. This only works for the opt-in tiers; to find out whether an object is in the Frequent Access, Infrequent Access or Archive Instant Access tier you will need to refer to the Amazon S3 Inventory. The S3 Inventory provides a snapshot of your objects’ metadata at a daily or weekly frequency. This snapshot also includes the S3 Intelligent-Tiering access tier, the key we are interested in.
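As a rough sketch of the HEAD check described above, the function below reads the `ArchiveStatus` field that S3 returns for objects in the opt-in archive tiers. It assumes a boto3 S3 client is passed in; the function name, bucket and key are hypothetical and only there for illustration.

```python
def archive_tier(s3_client, bucket, key):
    """Return the opt-in archive tier of an object, or None if it is not archived."""
    response = s3_client.head_object(Bucket=bucket, Key=key)
    # ArchiveStatus is only present for objects in the opt-in archive tiers
    # (ARCHIVE_ACCESS or DEEP_ARCHIVE_ACCESS); other tiers do not report it.
    return response.get("ArchiveStatus")


# Usage (needs boto3 and AWS credentials; bucket and key are placeholders):
#   import boto3
#   print(archive_tier(boto3.client("s3"), "example-bucket", "path/to/object"))
```

A `None` result only says the object is not in an opt-in archive tier; it does not tell you which of the other tiers it is in, which is why the inventory is still needed.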

Read more...

Ben Welsh and Katlyn Alo have created a course that walks through running your first Athena query, complete with sample data. I’ve written about Athena a few times on this blog and the course works as a great primer. This post will act as an addendum to the guide, specifically step 4, where you create an Athena database and table.

A database, in Athena, holds one or more tables, and the table points to the data and what schema it’s in. The creation of these resources in the guide follows a pattern I’ve seen a lot where the database and table are created using SQL through Athena. It’s simple, and does the job, but I’d like to document how to create a table and database using AWS Glue, an extract-transform-load data service, and highlight some comparisons between the two methods.

Read more...

Recently, Simon Willison shared how he uses S3 event notifications with Lambda and DynamoDB to list recently uploaded files from an S3 bucket. The first thought that occurred to me was to use S3 Inventory, which provides a daily catalog of objects within a bucket that is queryable through Athena. The second idea involved doing the same with the recently announced S3 Metadata feature. Both methods, I discovered, were already commented on by others. In this post, I want to explore the S3 Metadata method to get my feet wet with the service.

Read more...

Last month, AWS announced multi-session support natively in the AWS console; previously I’d relied on Firefox containers to separate logins between accounts. This can be easily enabled from the account drop-down in the top right of the console.

Multi-session support enabled and the usual account ID and IAM role is visible in the top right.

Read more...

While trying to test recovery of a service from a backup, as everyone should, I discovered a container image was no longer available from Docker Hub. I was greeted by the following error:

    ERROR: The image for the service you're trying to recreate has been removed. If you continue, volume data could be lost. Consider backing up your data before continuing.
    Continue with the new image? [yN]

I had a perfectly healthy and running instance of the service on my homelab, so I could save the image from it and include it in my backup copy.

Read more...

Data sources are used to retrieve information from outside of Terraform, in this case the default VPC, subnets, security group and internet gateway provisioned in a region within an AWS account. Each opted-in region within an AWS account comes with default network resources, which can be used to provision resources within a default subnet, to use the default internet gateway or security group for provisioned resources, and more.

Retrieve the default VPC

The default VPC can be retrieved using the aws_vpc data source and the default argument. We will use the default VPC ID to retrieve all other default network resources.

Read more...

AWS Identity Center is a service that allows you to manage and control user access to AWS accounts or applications. The user identities and groups can be provisioned from an external Identity Provider, like Okta or Keycloak, or managed directly within IAM Identity Center.

Disclaimer: Do not take the information here as a good or best practice. The purpose of this site is to post my learnings in somewhat real-time.

For the purposes of this post we will only care about managing access to existing AWS accounts, and assume the users and/or groups are already present. This will serve as a reminder for me of the order in which to perform each task.

Read more...

Schedules, events and alarms can be shared using the iCalendar data format regardless of the Calendar service or application like Google Calendar or Outlook. This format is defined in RFC 5545. Creating iCalendar files can be done in Python using the icalendar package. I’ve outlined a few example scenarios below. The package documentation has more details on usage.

Installing the package

    pip install icalendar

Other methods are available in the official documentation.

Read more...

sqlite3 CLI

The sqlite3 CLI allows you to import CSV files into a new or existing database file. The following multi-line command will import the CSV file, disruptions.csv, into the disruptions table. All columns will be of type TEXT if the table is created by the import command.

    sqlite3 data.db <<EOS
    .mode csv
    .import disruptions.csv disruptions
    EOS

The command infers the input file type from the output mode (.mode csv); otherwise, use --csv in the import command.
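If the CLI isn't available, roughly the same import can be sketched with Python's standard library. This is not what the sqlite3 CLI does internally, just an approximation under the assumption that the CSV has a header row; `import_csv` is a hypothetical helper name.

```python
import csv
import sqlite3


def import_csv(db_path, csv_path, table):
    """Load a CSV file with a header row into a table, creating it with TEXT columns."""
    with open(csv_path, newline="") as handle:
        reader = csv.reader(handle)
        header = next(reader)
        rows = list(reader)

    # Quote identifiers so column names containing spaces still work.
    columns = ", ".join('"{}" TEXT'.format(name.replace('"', '""')) for name in header)
    placeholders = ", ".join("?" for _ in header)
    quoted_table = '"{}"'.format(table.replace('"', '""'))

    connection = sqlite3.connect(db_path)
    try:
        with connection:  # commits on success, rolls back on error
            connection.execute(f"CREATE TABLE IF NOT EXISTS {quoted_table} ({columns})")
            connection.executemany(
                f"INSERT INTO {quoted_table} VALUES ({placeholders})", rows
            )
    finally:
        connection.close()
    return len(rows)


# Usage: import_csv("data.db", "disruptions.csv", "disruptions")
```

As with the CLI import, every column ends up as TEXT, so numeric columns need casting at query time.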

Read more...

FFmpeg has the ability to stabilise a video using a 2 pass approach. I first learnt about this from a post on Paul Irish’s website and later also shared the link. Their post also includes a useful single command to stabilise and create a comparison video to see the before and after. The purpose of my entry is to add some references and commands that I’ve found useful mostly for me to look up later.

Read more...

Grafana has the ability to use Amazon Athena as a data source allowing you to run SQL queries to visualize data. The Athena table data types are conveniently inherited in Grafana to be used in dashboard panels. If the data types in Athena are not exactly how you’d like them in Grafana you can still apply conversion functions.

In this case the timestamp column in Athena is formatted as a string, and I do not have the ability to adjust the table in Athena (which is normally what you’d want to do). If your Athena table doesn’t have a column in a DATE, TIMESTAMP or TIME format you will not be able to natively use a panel that relies on a timestamp. You can parse the timestamp string using the parse_datetime() function, which expects the string and the format the string is in. This column will now appear as a timestamp to Grafana.

Read more...

Exporting the results of a query can be useful to import it into other tools for data preparation or visualisation particularly as a CSV file.

sqlite3 CLI

The sqlite3 CLI allows you to set the query result mode to CSV and output that result to a file. A number of different output formats, including custom separators, can be set as well.
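For comparison, here is a minimal sketch of the same export using only Python's standard library instead of the CLI. The function name and the example query are assumptions for illustration, not something from the CLI itself.

```python
import csv
import sqlite3


def export_query_to_csv(db_path, query, csv_path):
    """Run a query and write the result, with a header row, to a CSV file."""
    connection = sqlite3.connect(db_path)
    try:
        cursor = connection.execute(query)
        # cursor.description holds one tuple per result column; the name is first.
        header = [column[0] for column in cursor.description]
        with open(csv_path, "w", newline="") as handle:
            writer = csv.writer(handle)
            writer.writerow(header)
            writer.writerows(cursor)
    finally:
        connection.close()


# Usage: export_query_to_csv("data.db", "SELECT * FROM disruptions", "out.csv")
```

Passing `delimiter=";"` (or similar) to `csv.writer` would be the rough equivalent of setting a custom separator.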

Read more...

GitHub Markdown can render Mermaid diagrams including Mermaid Gantt charts. I discovered this from Simon Willison who shared Bryce Mecum’s post on using Mermaid Gantt diagrams to display traces.

A Gantt diagram can be created and rendered within a code block tagged mermaid. A simple example copied from the docs can be seen below.

    gantt
        title A Gantt Diagram
        dateFormat YYYY-MM-DD
        section Section
            A task          :a1, 2014-01-01, 30d
            Another task    :after a1, 20d

I previously used MarkWhen’s Meridiem online editor to create Gantt charts to visualise the service life of Dutch Sprinter trains. This required me to share screenshots of the chart unless I created a view-only copy. Using GitHub I can share a gist with the details of the chart, which others can then use or modify. The migration from MarkWhen to Mermaid isn’t perfect, but it’s a start.

Read more...

I use python-frontmatter to read and parse the front matter in Markdown files used on the main site. The front matter is used to set page or post titles, along with categories and tags. The python-frontmatter library provides ways to load a file and parse the front matter, making it available as a dict. However, there isn’t a documented way to write the front matter content to files if you’re starting from a dict.

Read more...

Dynamically partitioning events on Amazon Data Firehose is possible using the jq 1.6 engine or using a Lambda function for custom parsing. Using JQ expressions through the console to partition events when configuring a Firehose stream is straightforward, provided you know the JQ expression and the source event schema. I found it difficult to translate this configuration into a CloudFormation template after initially setting up the stream by clicking through the console.

Read more...

sqlite-diffable is a tool, built by Simon Willison, to load and dump SQLite databases to JSON files. It’s intended to be used through the CLI; however, since May I’ve been using this tool as a callable Python module to build my main site after making changes to the code. This has allowed me to combine the build commands into one Python file. The changes aren’t available in the upstream code, so it’ll have to be pulled from my fork if you’re looking to use this.

Read more...

I usually forget the syntax for defining environment variables on different platforms, so here’s a note for future me to look up.

Bash/Zsh

    export VARIABLE_NAME=ABC123

Using export will set the environment variable within the current session. You can override the value by using export again on the same variable name. To apply this environment variable to all sessions, set the variable within the shell’s startup script, such as .bashrc, or in /etc/environment to make it available to all users.

Read more...

Within a repository’s settings tab, under Security, you can set Actions secrets and variables. A secret is encrypted and intended for sensitive data, whereas a variable is displayed as plain text. I intended to use a repository variable as an environment variable for a Python script within one of my workflows, and the GitHub documentation was not very useful in describing how to accomplish that.

Thanks to mbaum0 on this discussion thread I learned that you can access variables with the vars context. Using vars I can then set the variable within the environment of the workflow to be used by the Python script. In the example below I have set USERNAME as an Action variable and then set it as an environment variable within the workflow.

Read more...

Leaflet is a popular and feature-rich JavaScript library for displaying maps. One of its features is creating and placing markers on a map with an icon, which could represent a dropped pin or something custom. I need to create icons for markers that also contain a number, which could be different for each marker. Adding text to Leaflet icons can be done in a few ways.

Read more...

Cross-account access to an S3 bucket is a well-documented setup. Most guides will cover creating and applying a bucket policy to an S3 bucket and then creating a policy and role to access that bucket from another account. A user or service from that account can then assume that role, provided they’re allowed to by the role’s trust relationship, to access the S3 bucket via the CLI or API.

Read more...

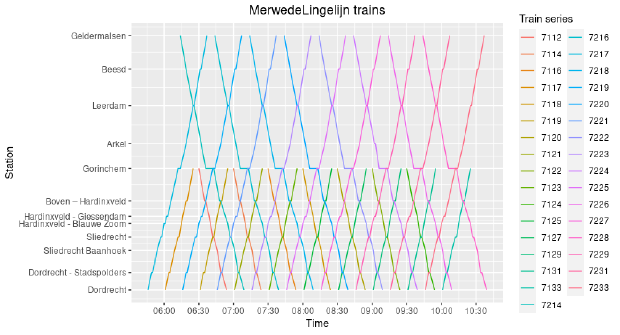

Timetable or schedule graphs visualise railway traffic on a route during a set time. Typically when these graphs are shared online they look like they’ve been created using jTrainGraph, although I find it very difficult to get started with. I attempted to use both Google Sheets and LibreOffice Calc with some degree of success, but only achieved what I wanted by using R. This was made possible by a single StackOverflow question from 2011.

Read more...

Granting a user permission to access resources across AWS accounts is a common task. Typically the account with the resources contains an IAM role with the appropriate policy defining the actions the user can perform. In addition to this, a trust policy is created that specifies which principal can assume that role in order to perform the allowed actions. Sometimes an external ID is added to the trust policy, which the user must supply when assuming that role. There are good reasons to use this, for which I will refer to the AWS documentation.
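To make the shape of such a trust policy concrete, here is a small sketch that builds one with an external ID condition. The account ID and external ID are made-up placeholders, and `trust_policy` is a hypothetical helper rather than anything from an AWS SDK.

```python
import json


def trust_policy(principal_arn, external_id):
    """Build a role trust policy that only allows AssumeRole with a matching external ID."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": principal_arn},
                "Action": "sts:AssumeRole",
                # The caller must pass this value as ExternalId when assuming the role.
                "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
            }
        ],
    }


# Placeholder account ID and external ID, for illustration only.
print(json.dumps(trust_policy("arn:aws:iam::111122223333:root", "example-external-id"), indent=2))
```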

Read more...

AWS allows you to enable server-side encryption (SSE) for your data at rest in your SQS queue. Disabling this option also has an effect on your encryption in transit. From the SQS documentation:

All requests to queues with SSE enabled must use HTTPS and Signature Version 4.

In other words, disabling SSE also means you can now communicate with SQS without TLS.

I wrote a Python script to send a message to a queue using the HTTP API and botocore, without using the higher-level abstractions of boto3. Note that the endpoint uses http and not https.

Read more...

On my Linux machine I have sometimes shared my screen as a virtual device so it can be used in other programs as a webcam. A similar FFmpeg command can also be used to capture video to a file.

Capture as a video device

The v4l2loopback module allows you to create virtual video devices under /dev/.

Read more...

You can track changes to a tag through AWS CloudTrail, AWS Config, or Amazon CloudWatch Events. These methods have already been documented, but they’re too slow to respond to changes, too expensive to run, not as extensible out-of-the-box, or outdated. I haven’t seen much coverage on doing this with Amazon EventBridge, which has many integration options, is low-latency, and is fairly low cost (and in this case free). There is a page in the documentation titled Monitor tag changes with serverless workflows and Amazon EventBridge that covers just that; I’d recommend starting there.
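As a rough illustration of what an EventBridge rule for tag changes matches on, the sketch below builds an event pattern for the `aws.tag` source and the `Tag Change on Resource` detail type. The tag key, service and resource type are example values I picked, and `tag_change_pattern` is a hypothetical helper.

```python
import json


def tag_change_pattern(tag_keys, service=None, resource_type=None):
    """Build an EventBridge event pattern that matches changes to the given tag keys."""
    detail = {"changed-tag-keys": list(tag_keys)}
    # Optionally narrow the match to one service and resource type.
    if service:
        detail["service"] = [service]
    if resource_type:
        detail["resource-type"] = [resource_type]
    return {
        "source": ["aws.tag"],
        "detail-type": ["Tag Change on Resource"],
        "detail": detail,
    }


# Example values only: match changes to the "environment" tag on EC2 instances.
print(json.dumps(tag_change_pattern(["environment"], "ec2", "instance"), indent=2))
```

The resulting JSON is what would go into the rule's event pattern, whether that is set through the console, the CLI or a template.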

Read more...

To show related content on this blog I use Hugo’s in-built functionality, which works surprisingly well with no setup. I did, however, want to test out creating text embeddings from my posts and rank them by similarity. Before you continue reading, the usual disclaimer:

Do not take the information here as good or best practice. The purpose of this entry is to post my learnings in somewhat real-time.

I will use Amazon Titan Embeddings G1 - Text available through Amazon Bedrock and SQLite to store the results. The full code can be found towards the end of this post.

Read more...

It has been 87 days since I enabled the ability to show related content at the bottom of each post on this blog, aptly titled “Related ramblings”. This uses Hugo’s built-in Related Content functionality. If you use a pre-made theme you may never directly work with this feature. I wanted to highlight how easy it was to use and how impressed I am with the results.

To enable my theme to use this feature, I simply had to create a new partial that could be used in my post template. The following snippet uses .Related, which takes the current page and finds every related page according to the related configuration. The first 5 are picked and rendered onto the page by referencing this partial. To view the code you can refer to the commit on the theme.

Read more...

There have been a number of occasions where I needed to insert JSON objects into an SQLite database, and the sqlite-utils Python library and CLI tool handled the task every time. I will be showcasing some of the JSON-inserting capabilities via the CLI below.

Inserting a single JSON object

Here the object is stored in data.json. sqlite-utils takes in the insert command, the name of the database, the name of the table, the filename, and lastly, I also specify the column to use as the primary key.

Read more...

To plot rail stations on a map for past blogs and toots I needed to fetch their longitudinal and latitudinal coordinates. This process can be described as geocoding: getting coordinates from a name or address. There are many powerful libraries out there that have the capability to do this, but I wanted something quick and simple to get started, and this is where Nominatim comes in. In their own words:

Nominatim (from the Latin, ‘by name’) is a tool to search OSM data by name and address and to generate synthetic addresses of OSM points (reverse geocoding). It has also limited capability to search features by their type (pubs, hotels, churches, etc).
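A minimal sketch of a geocoding lookup against the public Nominatim search endpoint, using only the standard library. It assumes the public instance at nominatim.openstreetmap.org and the `jsonv2` output format; the helper names and the example station are my own, and the public instance expects an identifying User-Agent.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

NOMINATIM_SEARCH_URL = "https://nominatim.openstreetmap.org/search"


def build_search_url(query, limit=1):
    """Build a Nominatim search URL for a free-form query."""
    params = {"q": query, "format": "jsonv2", "limit": limit}
    return f"{NOMINATIM_SEARCH_URL}?{urlencode(params)}"


def geocode(query, user_agent):
    """Return (latitude, longitude) for the first match, or None if nothing is found."""
    request = Request(build_search_url(query), headers={"User-Agent": user_agent})
    with urlopen(request, timeout=10) as response:
        results = json.load(response)
    if not results:
        return None
    # Nominatim returns coordinates as strings.
    return float(results[0]["lat"]), float(results[0]["lon"])


# Usage (makes a network request; the user agent is a placeholder):
#   print(geocode("Utrecht Centraal", "my-blog-geocoder/0.1"))
```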

Read more...

What I like about Sass and other CSS preprocessors, despite my limited usage of them, is the ability to nest CSS elements to assign style rules to children. Earlier this year the first version of the CSS Nesting Module specification was published by W3C. This brings the feature of CSS nesting to the browser! According to CanIUse, nesting is already supported by all major browsers as of this year.

Here’s a basic example: I have an article with a <h1> and <h2> header where I’d like all <h2> headers within the article to be blue.

Read more...

I host all my images outside of Hugo to prevent any large files from residing in my git repository. This has led me to serve high-resolution images in most of my posts, which isn’t ideal for the end user. I recently learned about Hugo’s ability to get remote images, aptly titled resources.GetRemote, which then allows me to apply Hugo’s image rendering capabilities.

This video by Eric Murphy covers some compressing and resizing methods within Hugo that I’ve applied to this blog. A detailed list of all the processing options can be found in the Hugo documentation.

Read more...

Update 2023-12-19: Got an update from the issue I raised that the AWS Backup Access Policy and IAM role issue has been resolved in the Terraform AWS Provider version v5.30.0 via this Pull Request thanks to @nam054 and @johnsonaj. The delay has now been added as part of the provider itself and I’ve confirmed it works! You can disregard the rest of this post or continue reading if you’re interested.

I recently came across an InvalidParameterValueException when trying to add a newly created AWS IAM role as a principal within an AWS Backup access policy in Terraform. It worked after applying the Terraform module a second time. After multiple repeated trials I found the module always failed on the first attempt but succeeded on the second. It seemed odd and after an embarrassingly long time searching online, I came across a pattern in the reported errors in the issues on the AWS Terraform Provider repository. These included MalformedPolicyDocument, InvalidPolicy, InvalidParameterValue among others, all related to referencing recently created IAM resources.

Read more...

The following post outlines how to use ffmpeg to take a number of images and create a video with each image present for a set duration with cross fades. The images are centered in the video with a black background. There are some instances when a previous image may be visible if it’s too large, it’s been a while since I tested this thoroughly so it could use some tweaking.

Read more...

Disclaimer: Do not take the information here as a good or best practice. The purpose of this site is to post my learnings in somewhat real-time.

AWS IAM Identity Center (previously and more commonly known as AWS SSO) allows you to control access to your AWS accounts through centrally managed identities. You can choose to manage these identities through IAM Identity Center, or through external Identity Providers (IdPs) such as Okta, Azure AD, and so on. AWS already has good documentation for all these sources. I have my identities on Keycloak which was a little bit difficult to set up for the first time, hence this post.

Read more...

The AWS API allows you to list-rules, which returns a list of all the rules but does not list targets. The API also provides you with list-targets-by-rule, which allows you to list the targets associated with a specific rule. If you want to find all the rules with a specific target, in this case an event bus, you can join both of them together.

No idea if this is acceptable practice, or if I’ll ever use this again, but I will unleash this string of commands and pipes to the world.
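The same join can be sketched in Python, assuming a boto3 EventBridge (`events`) client is passed in. This is not the shell pipeline from the post, just the idea of combining the two calls; `rules_with_target` and the ARN in the usage comment are hypothetical.

```python
def rules_with_target(events_client, target_arn, event_bus_name="default"):
    """Return the names of rules on a bus that have target_arn as one of their targets."""
    matches = []
    kwargs = {"EventBusName": event_bus_name}
    while True:
        page = events_client.list_rules(**kwargs)
        for rule in page.get("Rules", []):
            # Only the first page of targets is checked, which is an assumption
            # that each rule has a small number of targets.
            targets = events_client.list_targets_by_rule(
                Rule=rule["Name"], EventBusName=event_bus_name
            ).get("Targets", [])
            if any(target["Arn"] == target_arn for target in targets):
                matches.append(rule["Name"])
        token = page.get("NextToken")
        if not token:
            return matches
        kwargs["NextToken"] = token


# Usage (needs boto3 and AWS credentials; the ARN is a placeholder):
#   import boto3
#   bus = "arn:aws:events:eu-west-1:111122223333:event-bus/example"
#   print(rules_with_target(boto3.client("events"), bus))
```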

Read more...

This post details how to update a domain record entry on Linode based on the public IP of a machine running Linux. We will create a Python script and use the Linode API to accomplish this.

Create a personal token in Linode

From your Linode console, under My Profile > API Tokens, you can create a personal access token. The script only requires read/write access to the Domains scope. From here you can also set your desired expiration time.

Read more...

Disclaimer: Do not take the information here as a good or best practice. The purpose of this site is to post my learnings in somewhat real time.

Create an OIDC IdP on AWS

This needs to be done once for an AWS account. It configures the trust between AWS and GitHub through OIDC.

Read more...

Install exiftool.

    sudo apt install exiftool
    # sudo apt install libimage-exiftool-perl

Remove all tags.

    exiftool -all= image.jpg

Remove only EXIF tags.

    exiftool -EXIF= image.jpg

Server and client setup

Install Wireguard on both server and client.

    sudo apt install wireguard

Create the public and private key on both server and client. Store the private keys in a secure place.

    wg genkey | tee privatekey | wg pubkey > publickey

Server configuration

Create and open the file /etc/wireguard/wg0.conf. Insert the following block and view the examples in the table below.

Read more...

Recursively deleting all objects in a bucket and the bucket itself can be done with the following command.

    aws s3 rb s3://<bucket_name> --force

If the bucket has versioning enabled, any object versions and delete markers will fail to delete. The following message will be returned.

    remove_bucket failed: s3://<bucket_name> An error occurred (BucketNotEmpty) when calling the DeleteBucket operation: The bucket you tried to delete is not empty. You must delete all versions in the bucket.

The following set of commands deletes all objects, versions, delete markers, and the bucket.
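For reference, the same clean-up can be sketched in Python, assuming a boto3 S3 client is passed in. This is an alternative to the CLI commands, not a copy of them: it pages through every version and delete marker, deletes them in batches, and then removes the bucket. The function name is hypothetical, and it is destructive, so treat it as an illustration first.

```python
def empty_and_delete_bucket(s3_client, bucket):
    """Delete every object version and delete marker, then the bucket itself."""
    deleted = 0
    kwargs = {"Bucket": bucket}
    while True:
        page = s3_client.list_object_versions(**kwargs)
        objects = [
            {"Key": item["Key"], "VersionId": item["VersionId"]}
            for item in page.get("Versions", []) + page.get("DeleteMarkers", [])
        ]
        # delete_objects accepts at most 1,000 keys per request.
        for start in range(0, len(objects), 1000):
            batch = objects[start:start + 1000]
            s3_client.delete_objects(Bucket=bucket, Delete={"Objects": batch, "Quiet": True})
            deleted += len(batch)
        if not page.get("IsTruncated"):
            break
        kwargs["KeyMarker"] = page["NextKeyMarker"]
        kwargs["VersionIdMarker"] = page["NextVersionIdMarker"]
    s3_client.delete_bucket(Bucket=bucket)
    return deleted


# Usage (needs boto3 and AWS credentials; irreversible):
#   import boto3
#   empty_and_delete_bucket(boto3.client("s3"), "example-bucket")
```

The sketch does not inspect the `Errors` list that `delete_objects` can return, so a failed delete would only surface when the final `delete_bucket` call fails.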

Read more...

Grabbing just an IP address from a network interface can be useful for scripting. In the example below the assumed interface is eth0.

    ip a show eth0 | grep "inet " | cut -d' ' -f6 | cut -d/ -f1

You can then save this into a variable and use it in other commands.

    local_ip=$(ip a show eth0 | grep "inet " | cut -d' ' -f6 | cut -d/ -f1)
    python3 -m http.server 8000 --bind $local_ip
    hugo server --bind $local_ip --baseURL=http://$local_ip
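If the address is needed inside a Python script rather than a shell, the same parsing can be sketched as below. It assumes the usual `ip a show <interface>` output layout where the IPv4 line starts with `inet`; both function names are made up for this example.

```python
import subprocess


def parse_ipv4(ip_output):
    """Return the first IPv4 address found in `ip a show <interface>` output."""
    for line in ip_output.splitlines():
        fields = line.split()
        # The IPv4 line looks like: inet 192.168.1.10/24 brd ... scope global eth0
        if len(fields) >= 2 and fields[0] == "inet":
            return fields[1].split("/")[0]
    return None


def interface_ipv4(interface="eth0"):
    """Run `ip a show` for the interface and return its IPv4 address, if any."""
    result = subprocess.run(
        ["ip", "a", "show", interface], capture_output=True, text=True, check=True
    )
    return parse_ipv4(result.stdout)


# Usage (Linux with the ip command available): print(interface_ipv4("eth0"))
```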

    ffmpeg -i input.mkv -ss 00:00:03 -t 00:00:08 -async 1 output.mkv

| Argument | Description |
| --- | --- |
| -i | Specify input filename |
| -ss | Seek start position |
| -t | Duration after start position |
| -async 1 | Start of audio stream is synchronised |

See these StackOverflow answers for a debate on various other methods of trimming a video with ffmpeg.

Updating images for containers that are run through docker-compose is simple. Include the appropriate tags for the image value.

    docker-compose pull
    docker-compose up -d

You can then delete the old, now untagged image. The following command deletes all untagged images.

    docker image prune

Roll back to a previous image

If you wish to roll back to the previous image, first tag the old image.

Read more...

Install the NFS client package. For distros that use yum, install nfs-utils.

    sudo apt install nfs-common

Manually mount the share in a directory. Replace the following with your own values:

- server with your NFS server
- /data with your exported directory
- /mnt/data with your mount point

    sudo mount -t nfs server:/data /mnt/data

To automatically mount the NFS share, edit /etc/fstab with the following:

    # <file system> <mount point> <type> <options> <dump> <pass>
    server:/data /mnt/data nfs defaults 0 0

To reload fstab verbosely use the following command:

Read more...

EBS sends events to CloudWatch when creating, deleting or attaching a volume, but not on detachment. However, CloudTrail is able to list detachments. The command below lists the last 25 detachments.

    aws cloudtrail lookup-events \
        --max-results 25 \
        --lookup-attributes AttributeKey=EventName,AttributeValue=DetachVolume

Setting up notifications is then possible with CloudWatch alarms for CloudTrail. The steps are summarized below:

1. Ensure that a trail is created with a log group.
2. Create a metric filter with the Filter pattern { $.eventName = "DetachVolume" } in CloudWatch.
3. Create an alarm in CloudWatch with threshold 1 and the appropriate Action.
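The lookup command can also be sketched in Python, assuming a boto3 CloudTrail client is passed in. The function name is hypothetical; it returns the event time and user name for each DetachVolume event found.

```python
def recent_detachments(cloudtrail_client, max_results=25):
    """Return (event time, user name) pairs for recent DetachVolume events."""
    response = cloudtrail_client.lookup_events(
        LookupAttributes=[
            {"AttributeKey": "EventName", "AttributeValue": "DetachVolume"}
        ],
        MaxResults=max_results,
    )
    # Username is not guaranteed to be present on every event.
    return [
        (event["EventTime"], event.get("Username"))
        for event in response.get("Events", [])
    ]


# Usage (needs boto3 and AWS credentials):
#   import boto3
#   for when, who in recent_detachments(boto3.client("cloudtrail")):
#       print(when, who)
```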

Introduction

These are tar commands that I use often but need help remembering.

Contents

- Introduction
- Create an archive
- Create a gzip compressed archive
- Extract an archive
- Extract a gzip compressed tar archive
- List files in an archive
- List files in a compressed archive
- Extract a specific file from an archive

Create an archive

    tar -cvf send.tar send/

- -c: Create an archive
- -v: Verbose
- -f: Specify filename

Create a gzip compressed archive

    tar -czvf send.tar.gz send/

- -c: Create an archive
- -z: Compress archive with gzip
- -v: Verbose
- -f: Specify filename

Extract an archive

    tar -xvf send.tar

- -x: Extract an archive
- -v: Verbose
- -f: Specify filename

Extract a gzip compressed tar archive

    tar -xvzf send.tar.gz

- -x: Extract an archive
- -v: Verbose
- -z: Decompress using gzip
- -f: Specify filename

List files in an archive

    tar -tvf send.tar

- -t: List contents
- -v: Verbose
- -f: Specify filename

List files in a compressed archive

    tar -tzvf send.tar.gz

- -t: List contents
- -z: Decompress using gzip
- -v: Verbose
- -f: Specify filename

Extract a specific file from an archive

    tar -xvf send.tar my_taxes.xlsx scan.pdf

- -x: Extract an archive
- -v: Verbose
- -f: Specify filename

:)
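For scripts, the same create, list and extract operations can be sketched with Python's standard `tarfile` module. The helper names are my own; `r:*` lets tarfile detect the compression when reading.

```python
import tarfile


def create_archive(archive_path, source, gzip=False):
    """Create a tar archive of source, optionally gzip compressed."""
    mode = "w:gz" if gzip else "w"
    with tarfile.open(archive_path, mode) as archive:
        archive.add(source)


def list_archive(archive_path):
    """Return the member names of a plain or compressed tar archive."""
    with tarfile.open(archive_path, "r:*") as archive:
        return archive.getnames()


def extract_archive(archive_path, destination=".", members=None):
    """Extract everything, or only the named members, into destination."""
    with tarfile.open(archive_path, "r:*") as archive:
        selected = None
        if members is not None:
            selected = [archive.getmember(name) for name in members]
        archive.extractall(path=destination, members=selected)


# Usage:
#   create_archive("send.tar.gz", "send", gzip=True)
#   print(list_archive("send.tar.gz"))
#   extract_archive("send.tar.gz", members=["send/scan.pdf"])
```

Only extract archives you trust; like tar itself, extraction writes whatever paths the archive contains.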